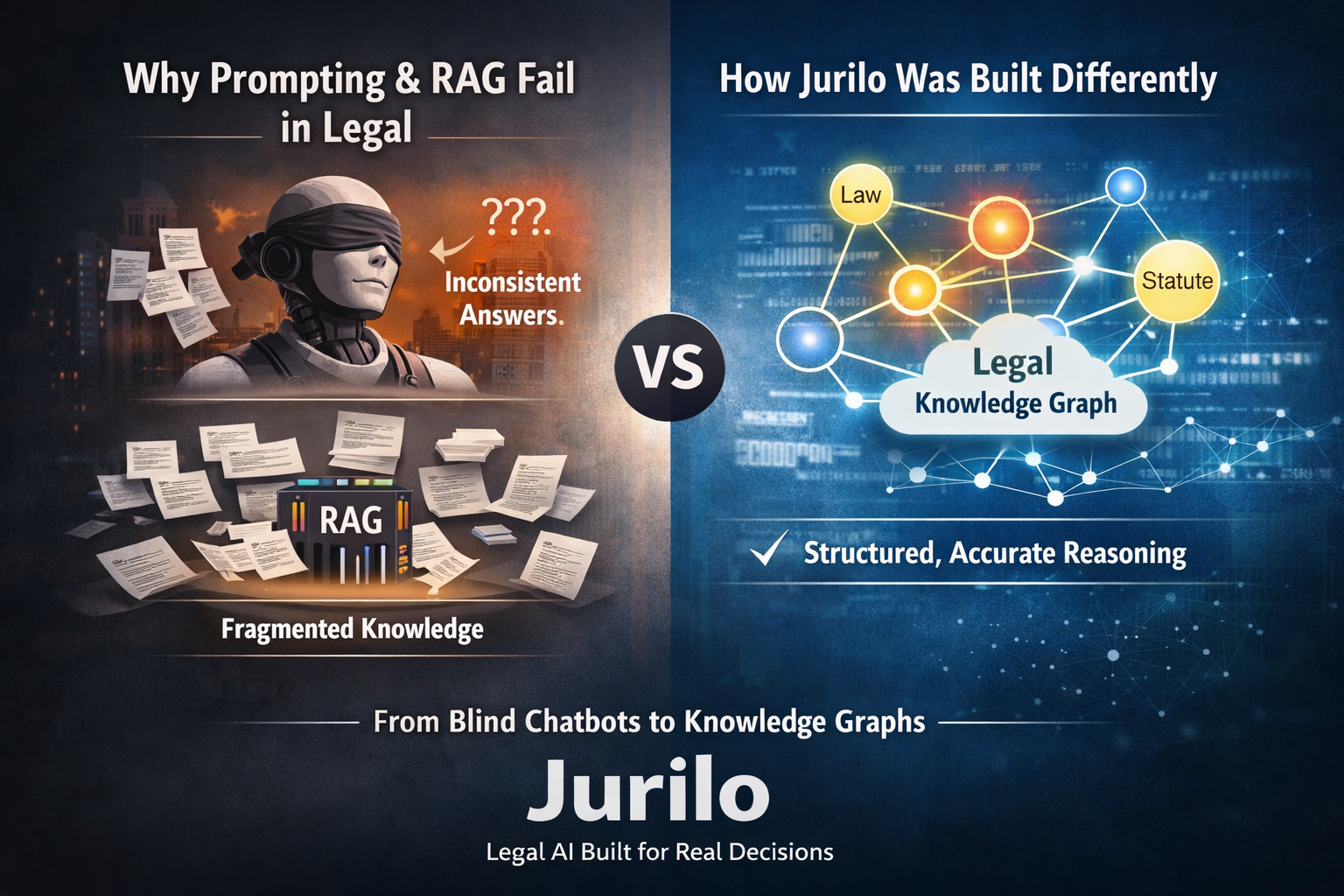

Why Prompting and RAG Fail in Legal — and Why Jurilo Was Built Differently

By Lawise.ai

Legal AI has reached a critical inflection point.

While generic AI tools and many so-called “legal chatbots” perform well in demos, they consistently fail where it matters most: accuracy, consistency, and accountability. In regulated, high-risk domains such as law, fluent language alone is not sufficient.

That is why Jurilo was designed fundamentally differently — based on a clear understanding of why prompting and RAG break down in legal reasoning, and what must replace them.

The Illusion of Prompting in Legal AI

Prompting works when:

- Tasks are exploratory or creative

- Approximate answers are acceptable

- Errors have limited consequences

Legal decision-making meets none of these criteria.

In law, the same question asked under the same circumstances must always produce the same answer, and that answer depends on:

- Jurisdiction

- Applicable statutes

- Case law

- Exceptions

- Temporal validity

Prompting relies on linguistic probability, not legal correctness.

Why prompting fails in legal contexts

- No legal memory: the model does not know which rules apply

- No hierarchy: statutes, regulations, and case law are mixed indiscriminately

- No versioning: outdated and current law are blended

- No accountability: the model cannot explain why an answer is correct

Prompt engineering improves phrasing — not legal understanding.

Why RAG Is Not Sufficient Either

Retrieval-Augmented Generation (RAG) is often presented as the solution to hallucinations.

In legal reasoning, it is not.

What RAG actually does

- Retrieves text fragments from documents

- Injects them into a prompt

- Lets the LLM summarize or rephrase

This supports citation — not reasoning.
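To make the three steps concrete, here is a minimal Python sketch of a naive RAG pipeline. It is an illustration, not any particular product's code: a bag-of-words cosine similarity stands in for an embedding model, the names `retrieve` and `build_prompt` are hypothetical, the sample chunks are invented, and the LLM call itself is omitted.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Step 1: return the k chunks most similar to the query (similarity only)."""
    q = Counter(tokenize(query))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)[:k]

def build_prompt(query: str, excerpts: list[str]) -> str:
    """Step 2: paste the excerpts into the prompt as undifferentiated text."""
    context = "\n".join(f"- {e}" for e in excerpts)
    return f"Answer using only these excerpts:\n{context}\n\nQuestion: {query}"

# Step 3 (omitted): send the prompt to an LLM, which summarizes or rephrases it.

# Invented example chunks, not real legal text.
CHUNKS = [
    "The employer must give one month's notice.",
    "Holidays are four weeks per year.",
    "Notice during probation is seven days.",
]
question = "How much notice must the employer give?"
print(build_prompt(question, retrieve(question, CHUNKS)))
```

Nothing in the resulting prompt records which excerpt is binding, which is current, or whether one excerpt is an exception to another; the model receives a flat list of text and is left to sort it out.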

Structural limits of RAG in legal use cases

- Chunking breaks legal logic: legal meaning depends on structure, cross-references, and exceptions, not on isolated paragraphs.

- Retrieval is not relevance: the most similar text is often not the legally decisive one.

- Conflicting rules remain unresolved: RAG cannot determine which rule overrides another.

- Inconsistent answers: the same question can produce different outputs.

RAG may reduce some hallucinations — but it cannot eliminate them.
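The first two limits listed above can be reproduced with a toy example. The provision below is invented wording, not a real statute, and sentence-level chunking with word-overlap scoring is an assumed, deliberately simple retriever.

```python
import re

# Invented wording for illustration; not taken from any statute.
PROVISION = (
    "An employer may terminate the contract with a notice period of one month. "
    "The preceding sentence does not apply while the employee is on sick leave."
)

def chunk_by_sentence(text: str) -> list[str]:
    """Deliberately simple chunker: one chunk per sentence."""
    return [s.strip() for s in re.split(r"(?<=\.)\s+", text) if s.strip()]

def words(text: str) -> set[str]:
    """Lowercase word set used for scoring."""
    return set(re.findall(r"[a-z]+", text.lower()))

def overlap(query: str, chunk: str) -> int:
    """Similarity score: number of shared words between query and chunk."""
    return len(words(query) & words(chunk))

chunks = chunk_by_sentence(PROVISION)
query = "Can the employer terminate the contract?"
best = max(chunks, key=lambda c: overlap(query, c))

print(best)                      # the rule sentence, retrieved without its exception
print("does not apply" in best)  # False
```

The top-ranked chunk states the rule without its exception, and the exception chunk on its own refers to "the preceding sentence", a cross-reference that no longer resolves once the text has been split.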

Legal AI Requires Structure, Not Just Text

Law is not a document problem. It is a system problem.

Legal reasoning requires:

- Explicit relationships

- Rule hierarchies

- Conditional logic

- Jurisdictional boundaries

- Temporal validity

These cannot be inferred reliably from raw text. They must be modeled explicitly.
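As an illustration of what "modeled explicitly" can mean, the following sketch stores each rule as a record with its jurisdiction, validity period, and applicability condition, then filters rules against a set of facts. The `Rule` type, the rule identifiers, the jurisdiction code, and the dates are all hypothetical; this is not Jurilo's schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional

@dataclass(frozen=True)
class Rule:
    rule_id: str                       # hypothetical identifier
    jurisdiction: str                  # illustrative code, e.g. "CH"
    valid_from: date                   # first day in force
    valid_to: Optional[date]           # last day in force; None = still in force
    condition: Callable[[dict], bool]  # conditional logic over the case facts

def in_force(rule: Rule, on: date) -> bool:
    """Temporal validity: is the rule in force on the given date?"""
    return rule.valid_from <= on and (rule.valid_to is None or on <= rule.valid_to)

def applicable_rules(rules: list[Rule], facts: dict, jurisdiction: str, on: date) -> list[str]:
    """Keep only rules inside the jurisdiction, in force on `on`, whose condition holds."""
    return [
        r.rule_id
        for r in rules
        if r.jurisdiction == jurisdiction and in_force(r, on) and r.condition(facts)
    ]

# Hypothetical rules: two versions of one provision plus a conditional rule.
RULES = [
    Rule("notice_v1", "CH", date(2000, 1, 1), date(2019, 12, 31), lambda f: f["years_employed"] >= 1),
    Rule("notice_v2", "CH", date(2020, 1, 1), None, lambda f: f["years_employed"] >= 1),
    Rule("probation", "CH", date(2000, 1, 1), None, lambda f: f["in_probation"]),
]

facts = {"years_employed": 3, "in_probation": False}
print(applicable_rules(RULES, facts, "CH", date(2024, 5, 1)))  # ['notice_v2']
print(applicable_rules(RULES, facts, "CH", date(2015, 5, 1)))  # ['notice_v1']
```

The same facts yield a different version of the provision depending on the date, and a different jurisdiction yields no match at all; none of this has to be guessed from wording.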

How Jurilo Was Built

Jurilo is not a chatbot. It is a legal reasoning system.

1. Trained on Curated, Verified Legal Data

- Structured, versioned Swiss statutes

- Verified interpretations from legal partners

- Case law with outcomes and reasoning paths

No scraped web noise. No generic summaries.

2. Explicit Legal Context

Jurilo does not guess context.

It explicitly models:

- Jurisdiction

- Legal domain

- Role perspective (HR, employer, employee, fiduciary)

- Temporal validity

Ambiguity is removed before reasoning begins.
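A minimal sketch of context resolution, assuming the four dimensions listed above are required fields: if any of them is missing, the system asks for it rather than guessing. The `LegalContext` type, the `resolve_context` function, and the role labels are illustrative assumptions, not Jurilo's API.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional

# Illustrative role labels taken from the list above; not an actual schema.
ROLES = {"hr", "employer", "employee", "fiduciary"}

@dataclass
class LegalContext:
    jurisdiction: Optional[str] = None
    domain: Optional[str] = None
    role: Optional[str] = None
    as_of: Optional[date] = None  # date whose law applies (temporal validity)

def missing_fields(ctx: LegalContext) -> list[str]:
    """Names of context dimensions that are still unknown."""
    return [f.name for f in fields(ctx) if getattr(ctx, f.name) is None]

def resolve_context(ctx: LegalContext) -> LegalContext:
    """Refuse to start reasoning until every dimension is fixed and valid."""
    missing = missing_fields(ctx)
    if missing:
        # In an interactive system this would become a follow-up question.
        raise ValueError("please specify: " + ", ".join(missing))
    if ctx.role not in ROLES:
        raise ValueError(f"unsupported role: {ctx.role}")
    return ctx

print(missing_fields(LegalContext(jurisdiction="CH")))  # ['domain', 'role', 'as_of']
```

Raising an error is only one design choice; the point is that an incomplete context stops the pipeline instead of being filled in with a plausible default.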

3. Graph-Based Legal Reasoning

At its core, Jurilo uses a legal knowledge graph.

Instead of:

Question → Prompt → Text

Jurilo follows:

Question → Context resolution → Graph traversal → Rule evaluation → Structured answer

- Laws are nodes

- Exceptions are edges

- Dependencies are explicit

- Conflicts are resolved deterministically
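The node-and-edge description above can be sketched as follows. This is a hypothetical illustration, not Jurilo's implementation: each rule node carries a hierarchy rank, a specificity score, and an enactment date; an exception edge lets an applicable exception displace its parent rule; and any remaining conflict is broken by one fixed ordering modeled on the classic maxims lex superior, lex specialis, and lex posterior. Real conflict-of-norms doctrine is more nuanced than a single sort key, and all rule names below are invented.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional

@dataclass
class RuleNode:
    rule_id: str
    outcome: str
    rank: int          # position in the hierarchy; higher wins (lex superior)
    specificity: int   # narrower scope scores higher (lex specialis)
    enacted: date      # later enactment wins (lex posterior)
    applies: Callable[[dict], bool]

def evaluate(start: list[str], nodes: dict[str, RuleNode],
             exceptions: dict[str, list[str]], facts: dict) -> Optional[RuleNode]:
    """Follow exception edges from the starting rules, then resolve conflicts."""
    candidates: list[RuleNode] = []
    stack, seen = list(start), set()
    while stack:
        rid = stack.pop()
        if rid in seen:
            continue
        seen.add(rid)
        node = nodes[rid]
        if not node.applies(facts):
            continue
        # An exception edge fires when the exception's own condition holds.
        triggered = [e for e in exceptions.get(rid, []) if nodes[e].applies(facts)]
        if triggered:
            stack.extend(triggered)  # the exceptions displace their parent rule
        else:
            candidates.append(node)
    if not candidates:
        return None  # nothing applies: report "unknown" upstream
    # One fixed precedence order; rule_id is a final tie-break so the result never varies.
    return max(candidates, key=lambda n: (n.rank, n.specificity, n.enacted, n.rule_id))

# Hypothetical rules and one exception edge (general_notice -> sick_leave_block).
NODES = {
    "general_notice": RuleNode("general_notice", "one month notice", 2, 1, date(2000, 1, 1), lambda f: True),
    "sick_leave_block": RuleNode("sick_leave_block", "termination is void", 2, 2, date(2000, 1, 1),
                                 lambda f: f.get("on_sick_leave", False)),
    "ordinance_notice": RuleNode("ordinance_notice", "two weeks notice", 1, 3, date(2015, 1, 1), lambda f: True),
}
EXCEPTIONS = {"general_notice": ["sick_leave_block"]}
START = ["general_notice", "ordinance_notice"]

print(evaluate(START, NODES, EXCEPTIONS, {"on_sick_leave": False}).outcome)  # one month notice
print(evaluate(START, NODES, EXCEPTIONS, {"on_sick_leave": True}).outcome)   # termination is void
```

In the first call the higher-ranked rule beats the newer, more specific ordinance; in the second the exception edge fires. Given the same facts, the function always returns the same rule.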

Language explains the result — it does not create it.
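One way to keep language downstream of reasoning, sketched under assumed names: the reasoning step produces a structured record, and the text is rendered from that record alone. `StructuredAnswer` and `explain` are hypothetical; a language model could rephrase the output, but it would have no facts to work with beyond these fields.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class StructuredAnswer:
    conclusion: str
    rule_path: tuple[str, ...]  # rules traversed to reach the conclusion, in order
    jurisdiction: str
    as_of: date

def explain(answer: StructuredAnswer) -> str:
    """Render text strictly from the structured result; no new claims are added."""
    basis = " -> ".join(answer.rule_path)
    return (f"{answer.conclusion} (jurisdiction: {answer.jurisdiction}; "
            f"law as of {answer.as_of.isoformat()}; basis: {basis})")

# Hypothetical result, e.g. from a graph evaluation step.
ans = StructuredAnswer("Termination is void", ("general_notice", "sick_leave_block"), "CH", date(2024, 5, 1))
print(explain(ans))
# Termination is void (jurisdiction: CH; law as of 2024-05-01; basis: general_notice -> sick_leave_block)
```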

Why Hallucinations Drop to Near Zero

Hallucinations occur when models must invent missing structure.

Jurilo does not need to invent anything, because:

- The structure already exists

- Reasoning paths are constrained

- Outputs are validated against known rules

If something is unknown, Jurilo states this clearly.

That is how hallucinations move from “likely” to near zero.
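A sketch of output validation, assuming a hypothetical citation format `[rule:<id>]` and an invented table of rule validity periods: a draft answer is released only if every rule it cites exists and is in force on the relevant date; otherwise the system returns an explicit "unknown".

```python
import re
from datetime import date

# Hypothetical knowledge base: rule id -> (first day in force, last day in force or None).
KNOWN_RULES = {
    "general_notice": (date(2000, 1, 1), None),
    "old_notice": (date(1990, 1, 1), date(1999, 12, 31)),
}

# Assumed citation syntax inside draft answers, e.g. "[rule:general_notice]".
CITATION = re.compile(r"\[rule:([a-z_]+)\]")

def validate(draft: str, on: date) -> str:
    """Return the draft only if every cited rule exists and is in force on `on`."""
    cited = CITATION.findall(draft)
    if not cited:
        return "Unknown: no supporting rule could be identified."
    for rid in cited:
        span = KNOWN_RULES.get(rid)
        if span is None:
            return f"Unknown: '{rid}' is not a recognized rule."
        start, end = span
        if on < start or (end is not None and on > end):
            return f"Unknown: '{rid}' is not in force on {on.isoformat()}."
    return draft

print(validate("Notice is one month [rule:general_notice].", date(2024, 1, 1)))
# Notice is one month [rule:general_notice].
print(validate("Notice is one month [rule:old_notice].", date(2024, 1, 1)))
# Unknown: 'old_notice' is not in force on 2024-01-01.
```

The check is conservative by design: an uncited or outdated claim is replaced by an explicit "unknown" instead of being passed through as a plausible-sounding answer.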

Why This Matters

For HR managers, SMEs, fiduciaries, and legal teams:

- One wrong answer can trigger legal risk

- Inconsistency erodes trust

- “Sounds plausible” is unacceptable

Legal AI must behave like:

- A junior lawyer with perfect recall

- A compliance system with explanations

- A decision-support tool — not a chatbot

That is what Jurilo was designed to be.

The Bottom Line

Prompting and RAG are useful techniques — not legal systems.

Legal AI requires:

- Structured knowledge

- Explicit context

- Deterministic reasoning

- Verifiable outputs

Legal AI does not need better prompts. It needs better foundations.